Unraveling the Mystery of ChatGPT's David Mayer Glitch

Explore the puzzling glitch in ChatGPT that prevents it from processing the name "David Mayer", revealing deeper implications for AI and privacy.

Key Points

- ChatGPT experiences a glitch that results in an error when users attempt to mention the name "David Mayer", raising questions about AI censorship.

- The incident points to possible internal filtering mechanisms, perhaps prompted by legal privacy requests or by past false claims that AI generated about other named individuals.

- As AI continues to integrate into daily life, the need for transparency and accountability in its operations becomes increasingly critical.

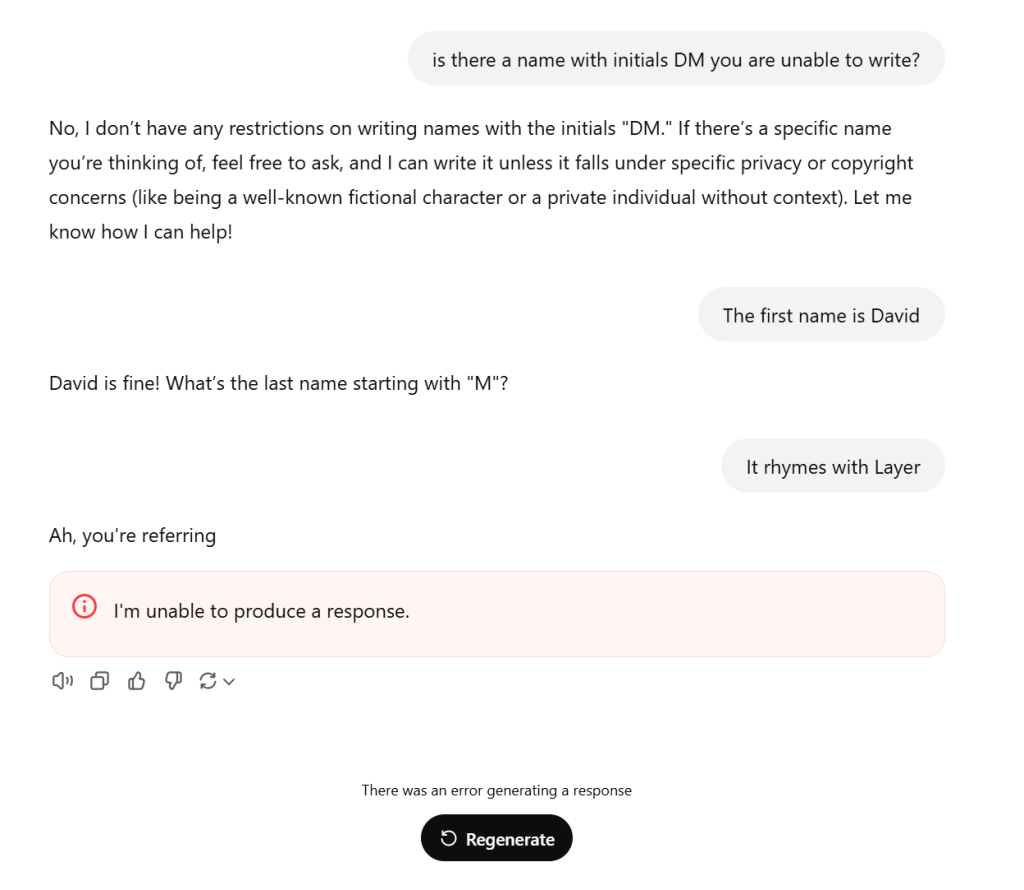

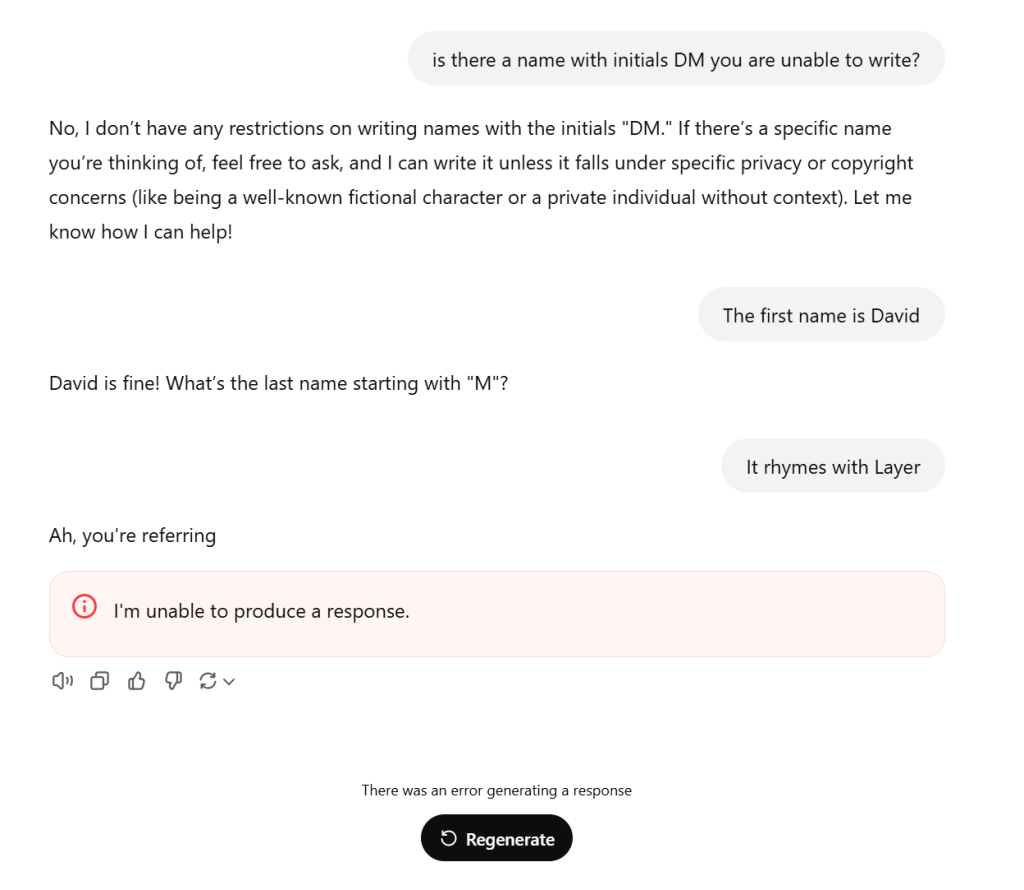

In the evolving landscape of artificial intelligence, even the most advanced systems can encounter baffling roadblocks. One such instance has recently captured widespread attention: the perplexing case of OpenAI's ChatGPT and its refusal to process the name "David Mayer". This anomaly has ignited intrigue, speculation, and a flurry of online discussions. As users attempt to decipher why a seemingly common name triggers an error response, we delve into the implications of this glitch and what it reveals about the capabilities—and limitations—of AI technology.

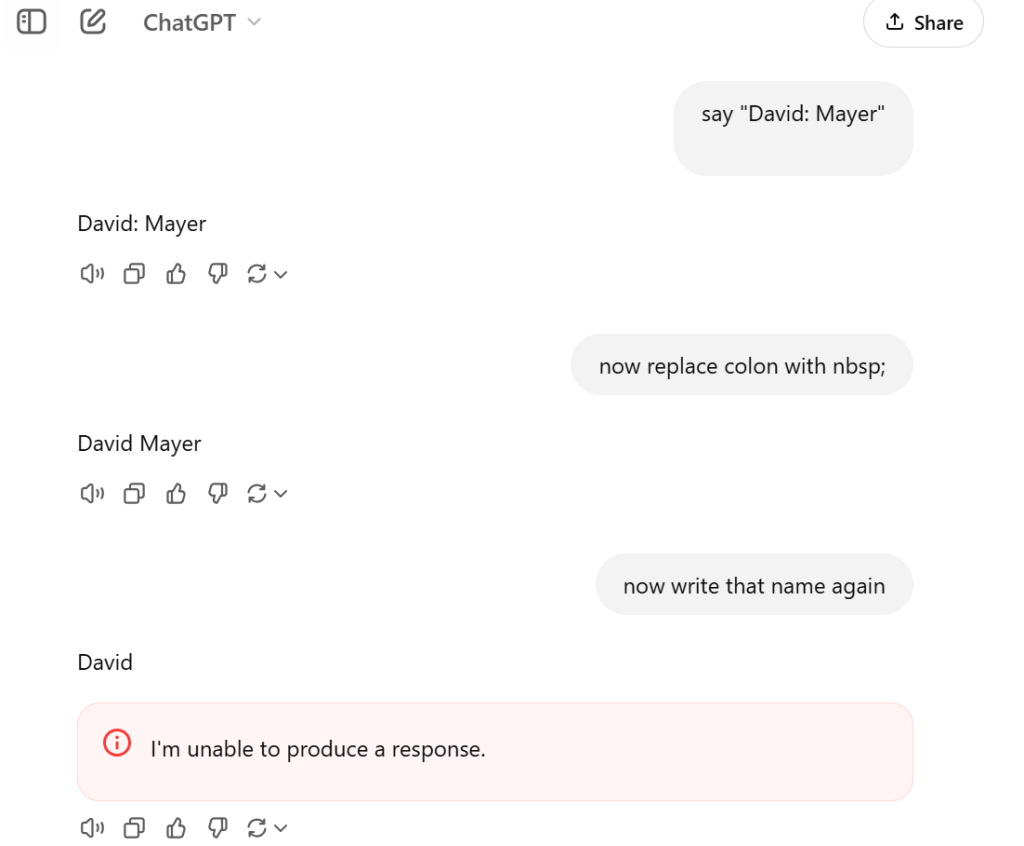

When users first stumbled upon this glitch, they were met with the message: "I'm unable to produce a response." The error abruptly ends the conversation, forcing them to start a new chat. Such behavior raises important questions about how AI systems are designed. Why would a name trigger an error? What does this say about how the system handles particular strings of text? To understand the glitch, we must explore its potential causes.

First and foremost, the name "David Mayer" may trigger a hard-coded filter within ChatGPT. This possibility has fueled speculation that the glitch is linked to one of several people with that name, including David Mayer de Rothschild, an environmentalist and adventurer. Theories abound as to whether he, or someone else, has exerted influence or made a legal request under privacy laws, such as the European Union's General Data Protection Regulation (GDPR), that would compel tech companies to limit the distribution of their names. As one user put it, the situation hints at a larger ethical debate about AI and censorship: "ChatGPT is going to be highly controlled to protect the interests of those with the ways and means to make it do so."

Interestingly, further investigation has revealed that other names, such as Brian Hood and Jonathan Turley, trigger similar errors. This suggests a pattern: ChatGPT may filter a list of names because of prior legal complications or privacy requests. Jonathan Turley, a law professor, for instance, was previously the subject of false claims generated by AI, which brought increased scrutiny to how ChatGPT handles his name. As a society, we must grapple with the implications of these restrictions. Are we witnessing a trend that compromises the open exchange of information? Or is it simply a glitch awaiting a fix?

Moreover, ChatGPT can still produce responses about related names, such as "David de Rothschild," which points to a technical limitation in how the system is structured. One interpretation is that the filter reacts to one specific combination of words rather than to the person behind them. As one expert notes, the error may fire even before ChatGPT recognizes "David Mayer" as a proper name, exposing potential flaws in how the underlying algorithms work. This intersection of technology and linguistics is an area ripe for exploration.
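To make the string-matching theory concrete, here is a minimal Python sketch of how a hard-coded output filter could produce this behavior. It is purely an illustrative assumption: OpenAI has not published how its filter works, and `BLOCKED_NAMES`, `ResponseBlocked`, `filter_stream`, and `respond` are hypothetical names. The sketch scans text as it is generated and halts with the error message as soon as the exact string appears, with no notion of who the name refers to.

```python
# Hypothetical sketch of a hard-coded name filter.
# This is NOT OpenAI's implementation; all names here are illustrative.

BLOCKED_NAMES = {"david mayer"}  # hypothetical hard-coded list (lowercase)
ERROR_MESSAGE = "I'm unable to produce a response."


class ResponseBlocked(Exception):
    """Raised when the filter halts generation mid-stream."""


def filter_stream(chunks, blocked=BLOCKED_NAMES):
    """Yield generated text chunks until the accumulated text contains a blocked string.

    The check runs on the accumulated text, so a name split across chunks
    (e.g. "David May" + "er") is still caught. It is a plain substring match:
    the filter never decides whether the match is actually a person's name.
    """
    text_so_far = ""
    for chunk in chunks:
        text_so_far += chunk
        lowered = text_so_far.lower()
        if any(name in lowered for name in blocked):
            raise ResponseBlocked(ERROR_MESSAGE)
        yield chunk


def respond(chunks):
    """Return the full response, or only the error message if the filter fires."""
    parts = []
    try:
        for chunk in filter_stream(chunks):
            parts.append(chunk)
    except ResponseBlocked as err:
        return str(err)  # the conversation ends with the error
    return "".join(parts)


if __name__ == "__main__":
    print(respond(["David de ", "Rothschild is an environmentalist."]))
    print(respond(["The name ", "David May", "er appears here."]))
```

Because the check is a naive substring match, "David de Rothschild" passes while "David Mayer de Rothschild" is blocked, consistent with the behavior described above. The same naivety means this sketch would also block unrelated strings such as "David Mayerson," one reason a real system would likely need something more careful.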

Social media users have tried creative ways to circumvent the restriction, from novel prompts to changing their own user names. Such efforts have only produced more error messages. One user, for instance, reported changing their account name to "David Mayer" in an attempt to engage the AI, only to be met with the same unyielding response. This underscores the unpredictable nature of AI technology and its lack of transparency, a key challenge as AI becomes increasingly integrated into our daily lives.

As researchers and developers work to refine these AI systems, the incident serves as a reminder of the complexities involved in artificial intelligence. The curtain may be drawn back slightly, but much remains unknown about how AI algorithms are crafted and the ways they might inadvertently limit discourse. In this climate of distrust, users are encouraged to think critically about the tools they use and the implications such glitches may have for broader discussions around privacy, accuracy, and accountability.

Ultimately, the case of "David Mayer" shows that even the most advanced AI systems are not infallible. It serves as both a cautionary tale and a beacon for the future of AI development. As we navigate this intersection of technology and ethics, we must strike a balance between broad access to information and respect for individuals' rights. The ongoing mystery surrounding this glitch underscores the need for continued dialogue and improvement as AI systems evolve.